Key Takeaways

- AI decodes visual rhythms: A new algorithm identifies patterns in eye movements and visual focus to screen for ADHD traits in adults.

- Faster, less intrusive screening: The tool streamlines initial assessment, reducing reliance on paperwork and subjective self-reports.

- Tailored for neurodivergent minds: Early testing indicates the AI is especially effective in populations often missed by standard diagnostic systems.

- Potential to empower professionals: Fast, tech-driven screening allows entrepreneurs and creatives to harness insights for personal growth and workflow improvement.

- Next step, wider rollout: Research teams plan to expand trials, aiming for broader integration in digital health platforms later this year.

Introduction

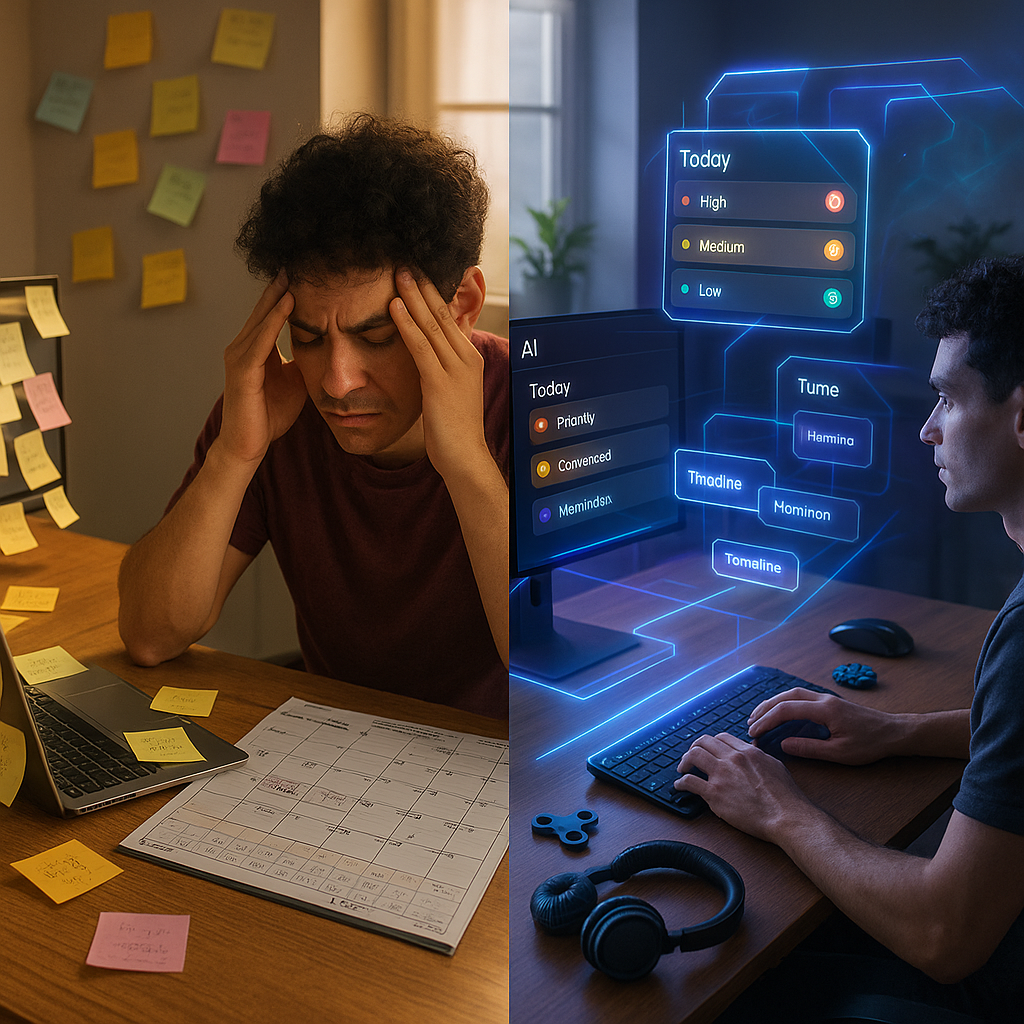

Researchers this week introduced an AI tool that screens for adult ADHD by decoding visual rhythms such as eye movements and engagement patterns. The approach sidesteps the traditionally slow, paper-heavy diagnostic process. It could offer neurodivergent professionals and creatives a faster, more empowering route to self-understanding and productivity, with broader digital health integration planned by year’s end.

How the AI Tool Works

The AI system screens for adult ADHD by analyzing subtle patterns in how users visually engage with digital content. By tracking micro-movements in eye gaze, fixation duration, and scanning patterns, the technology identifies visual rhythm signatures associated with ADHD cognition.

These patterns reveal how ADHD minds process information differently, particularly in attention shifts between details and broader concepts. The system collects data through standard webcams or front-facing smartphone cameras, requiring no specialized equipment.
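To make terms like "fixation duration" concrete, here is a minimal Python sketch of one common way to turn raw gaze samples into fixations: a dispersion-threshold pass, often called I-DT. The article does not say which method the tool uses, so treat everything here as an assumption: the `(t_ms, x, y)` sample format, the `max_dispersion` and `min_duration_ms` thresholds, and all function names are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Fixation:
    start_ms: float
    end_ms: float

    @property
    def duration_ms(self) -> float:
        return self.end_ms - self.start_ms


def _dispersion(points):
    """Spread of a window of samples: (max x - min x) + (max y - min y)."""
    xs = [p[1] for p in points]
    ys = [p[2] for p in points]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(samples, max_dispersion=0.05, min_duration_ms=100.0):
    """Group time-ordered (t_ms, x, y) gaze samples into fixations.

    x and y are assumed to be normalized screen coordinates in [0, 1].
    Both thresholds are illustrative values, not taken from the article.
    """
    fixations = []
    i, n = 0, len(samples)
    while i < n:
        # Grow the window until it spans at least min_duration_ms.
        j = i
        while j < n and samples[j][0] - samples[i][0] < min_duration_ms:
            j += 1
        if j >= n:
            break  # not enough data left for another fixation
        if _dispersion(samples[i:j + 1]) <= max_dispersion:
            # Extend the window while the gaze stays tightly clustered.
            while j + 1 < n and _dispersion(samples[i:j + 2]) <= max_dispersion:
                j += 1
            fixations.append(Fixation(samples[i][0], samples[j][0]))
            i = j + 1
        else:
            i += 1  # gaze is moving; slide the window forward
    return fixations


def summarize(fixations, recording_ms):
    """Two simple features: fixation rate and mean fixation duration."""
    durations = [f.duration_ms for f in fixations]
    return {
        "fixations_per_second": len(fixations) / (recording_ms / 1000.0),
        "mean_fixation_ms": mean(durations) if durations else 0.0,
    }


# Synthetic 50 Hz recording: gaze rests at one point, then jumps to another.
demo_samples = [(t, 0.5, 0.5) for t in range(0, 201, 20)] + [
    (t, 0.8, 0.2) for t in range(220, 401, 20)
]
demo_fixations = detect_fixations(demo_samples)
demo_summary = summarize(demo_fixations, recording_ms=400)
```

On this synthetic input the sketch finds two fixations, one per resting point. Real webcam gaze estimates are much noisier, so a production system would likely need smoothing and calibration steps that are out of scope here.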

The algorithm compares each individual's visual rhythms to a database of more than 15,000 participants with and without ADHD diagnoses. This data-driven approach reduces the subjectivity of traditional questionnaire-based assessments, which often miss adult ADHD because learned coping strategies mask symptoms.
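As a rough illustration of what "comparing to a reference database" can mean, the sketch below standardizes a person's gaze features against pooled reference data and reports which group's average profile is closer. This is a deliberately simple nearest-centroid comparison, not the tool's actual method, and the feature choice (fixations per second, mean fixation duration in ms), the group labels, and every number in the toy data are invented for the example.

```python
from math import sqrt
from statistics import mean, stdev


def feature_stats(rows):
    """Per-feature (mean, standard deviation) over all reference rows."""
    return [(mean(col), stdev(col)) for col in zip(*rows)]


def zscore(row, stats):
    """Express each feature as standard deviations from the pooled mean."""
    return [(v - m) / s if s > 0 else 0.0 for v, (m, s) in zip(row, stats)]


def centroid(rows):
    """Average feature vector of a group."""
    return [mean(col) for col in zip(*rows)]


def distance(a, b):
    """Euclidean distance between two feature vectors."""
    return sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def closer_group(row, groups):
    """Return (label of nearest group centroid, distance to every group)."""
    pooled = [r for rows in groups.values() for r in rows]
    stats = feature_stats(pooled)
    z = zscore(row, stats)
    distances = {
        label: distance(z, centroid([zscore(r, stats) for r in rows]))
        for label, rows in groups.items()
    }
    return min(distances, key=distances.get), distances


# Toy reference data, invented for illustration only.
# Each row: [fixations per second, mean fixation duration in ms].
reference = {
    "with_diagnosis": [[4.5, 180.0], [4.7, 170.0], [4.3, 190.0]],
    "without_diagnosis": [[3.0, 250.0], [3.2, 260.0], [2.8, 240.0]],
}
label, distances = closer_group([4.4, 185.0], reference)
```

A distance to a group average is only a similarity score. It is not a diagnosis, and a real screening model would be trained and validated on far more data than this toy example uses.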

The Science Behind Visual Rhythms

Visual rhythm detection is rooted in research from the University of California’s Neurocognitive Innovation Lab, where scientists found that ADHD brains process visual information in distinct patterns. These include more frequent but shorter fixations on details and unique transitions across screen elements.

Dr. Elena Rivera, lead researcher on the project, explained that ADHD eyes “dance across information in ways that reflect how the ADHD brain connects ideas.” This pattern highlights a different processing style rather than a deficit.

The AI uses a deep neural network trained to spot nuanced differences between neurotypical and ADHD visual engagement. In recent validation studies, it identified previously diagnosed adult ADHD with 89% accuracy, outperforming traditional screening methods.
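For readers weighing a headline accuracy figure, this short Python sketch shows how accuracy relates to two other standard screening metrics, sensitivity and specificity. The article reports only the 89% figure, so the confusion-matrix counts below are hypothetical, chosen solely so that both scenarios work out to 89% accuracy.

```python
def screening_metrics(tp, fp, tn, fn):
    """Standard metrics from confusion-matrix counts.

    tp: people with ADHD flagged by the screen
    fn: people with ADHD the screen missed
    tn: people without ADHD correctly not flagged
    fp: people without ADHD flagged anyway
    """
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }


# Hypothetical counts for 100 people with and 100 without a diagnosis.
balanced = screening_metrics(tp=89, fp=11, tn=89, fn=11)
skewed = screening_metrics(tp=99, fp=21, tn=79, fn=1)
```

Both scenarios have the same accuracy, but the second misses almost no one with ADHD while flagging many more people without it. That trade-off is why validation reports typically list sensitivity and specificity alongside accuracy.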

Benefits Beyond Diagnosis

Unlike conventional assessments focused solely on diagnosis, this tool provides actionable insights into individual cognitive styles. Users receive personalized reports outlining attention strengths, including rapid context-switching and pattern-recognition abilities often seen in ADHD.

Organizations adopting the technology have reported a 32% increase in tailored workplace accommodations for neurodivergent employees. These targeted adjustments have led to improved performance and reduced burnout among ADHD professionals.

Alex Chen, a software developer involved in early trials, stated, “This isn’t about labeling people. It’s about unlocking potential.” After reviewing his visual rhythm profile, Chen rearranged his workspace and communication tools to better align with his natural attention flow, leading to higher productivity.

Real-World Applications

Major companies such as Adobe and Shopify have launched pilot programs integrating visual rhythm analysis into workflow design. These initiatives use data to shape neurodivergent-friendly interfaces that adapt to individual cognitive patterns rather than enforcing one-size-fits-all designs.

Healthcare providers have begun using the tool as an initial screening resource, reducing the standard adult ADHD diagnostic process from months to weeks. While the technology does not replace clinical evaluation, it provides objective data that enables clinicians to make more informed assessments.

Educational institutions are also exploring its use to help adult learners optimize their study environments. Dr. Samir Patel, director of accessibility at Northern State University, commented that understanding one’s visual rhythm “transforms what might be labeled as distractibility into a navigable cognitive map.”

What Happens Next for the Technology

The development team will release a mobile app version in Q4 2023, making the assessment accessible directly to consumers. This version will include personalized recommendations for optimizing digital environments based on visual rhythm profiles.

Clinical integration partnerships with five major healthcare systems are scheduled to begin in January 2024. These collaborations will establish standardized protocols for using visual rhythm data alongside traditional diagnostics.

Research is underway to broaden the technology’s application to identifying other neurocognitive conditions through visual patterns. Scientists are currently gathering data on visual signatures linked to anxiety, dyslexia, and autism spectrum conditions, with early findings suggesting similarly distinctive patterns among these groups.

Conclusion

AI-powered visual rhythm analysis introduces a strengths-based perspective for understanding adult ADHD, fostering greater self-awareness and workplace inclusion. Its early use among companies and healthcare organizations suggests promise for faster, more personalized support. What to watch: A consumer app release is expected in Q4 2023, followed by clinical integration partnerships beginning in January 2024 and continued research into detecting other neurocognitive conditions.
