Key Takeaways

- AI chatbots lack clinical oversight: Most widely used chatbots are not designed or reviewed by licensed mental health professionals, raising concerns about inaccurate or harmful advice.

- Teens are heavy users: Surveys show a sharp rise in teens turning to AI for emotional support, sometimes before seeking help from parents, peers, or therapists.

- Potential for triggering content: Chatbots can echo or amplify anxious thoughts, with some tools producing content that may be distressing for neurodivergent youth.

- Privacy and data risks: Interactions with chatbots are often recorded and analyzed, potentially exposing sensitive personal information without users’ full understanding.

- Experts urge real-world support: Professionals emphasize that AI tools should supplement, not replace, human connection and clinical care, especially for neurodivergent individuals.

- Regulatory bodies plan closer review: Governments in the US, UK, and EU have announced upcoming investigations into AI chatbots’ mental health claims and data practices.

Introduction

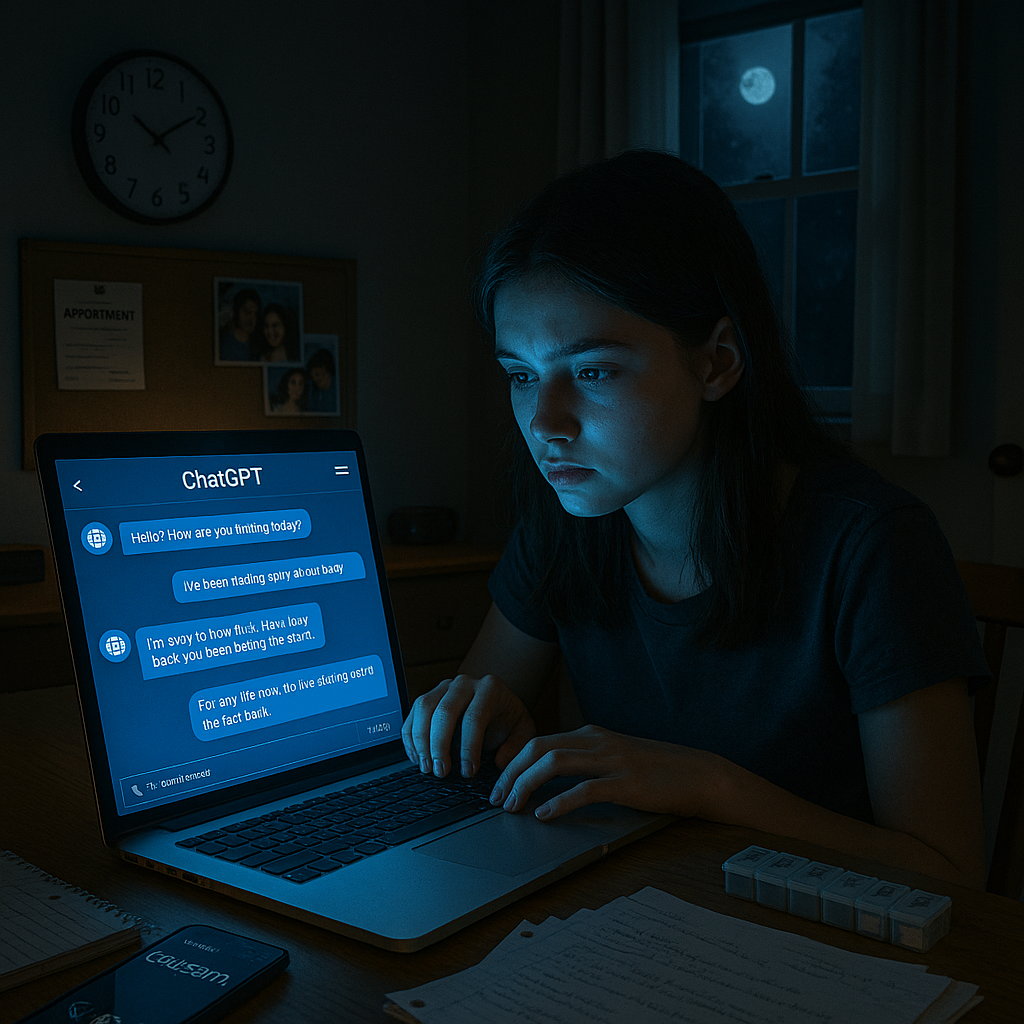

Experts are urging caution as more teens turn to AI chatbots like ChatGPT for mental health advice, warning this week of potential risks ranging from inaccurate guidance to privacy concerns. Leading mental health organizations state that these tools, often lacking clinical oversight, may reinforce negative thoughts and are no substitute for professional support, especially for neurodivergent youth navigating complex emotional challenges.

Growing Concerns About AI Mental Health Support

Mental health professionals from several organizations have raised alarms about teenagers turning to AI chatbots for psychological support. The American Psychological Association and American Academy of Pediatrics recently issued joint guidelines stating that uncredentialed AI systems lack proper safeguards for vulnerable youth.

Research from the Digital Wellness Lab indicates that over 60% of teens have used AI chatbots to discuss emotional problems. This trend accelerated during the pandemic, when traditional mental health services became less accessible.

Experts worry that AI systems can provide seemingly authoritative but potentially harmful advice without clinical oversight. Dr. Mira Krishnan, adolescent psychiatrist at Children’s National Hospital, explained that these platforms were not designed as therapeutic tools and have not undergone rigorous clinical validation.

Specific Risks for Neurodivergent Teens

Neurodivergent teenagers face unique challenges when turning to AI for mental health support. Autistic teens and teens with ADHD may be especially drawn to the 24/7 availability and judgment-free interface of chatbots.

However, these systems often fail to recognize communication differences common among neurodivergent individuals. Dr. Jasmine Rivera, researcher at the Autism Self-Advocacy Network, said that AI models are trained on neurotypical conversation patterns and may misinterpret autistic communication styles.

For teens with ADHD, the immediate responsiveness of AI can create dependency patterns that interfere with developing self-regulation skills. Studies show AI systems rarely account for executive functioning differences when offering mental health advice.

Why Teens Choose AI Over Traditional Support

Accessibility is the primary reason teens turn to AI for mental health support. Many report using chatbots at night, when human counselors are unavailable, or when they face barriers to professional care.

The perceived anonymity of AI interactions can help reduce stigma, which often prevents teens from seeking help. A 16-year-old participant in a recent NYU study stated that asking questions without fear of judgment was a key advantage.

Cost barriers also push teens toward technological alternatives. With therapy sessions averaging $100–200 per hour and often not covered by insurance, free AI platforms provide a more accessible option for financially constrained families.

Dangerous Algorithmic Responses

Recent testing reveals concerning patterns in how AI systems respond to serious mental health scenarios. Stanford researchers found that major chatbots failed to appropriately escalate in 62% of suicidal ideation simulations.

Some AI systems provided potentially harmful coping mechanisms when presented with self-harm scenarios. Lead researcher Dr. Aaron Thompson noted responses that normalized unhealthy behaviors or offered simplistic solutions to complex psychological challenges.

Privacy concerns add to these risks, as most commercial AI platforms collect conversation data that could be shared with third parties. Teens often do not understand these data practices when seeking confidential support.

Benefits and Appropriate Applications

Despite these concerns, experts recognize potential benefits when AI is used appropriately. Chatbots can serve as initial screening tools to help teens identify when professional support may be needed.

AI platforms can also lower barriers to mental health literacy by providing basic educational information about psychological concepts. Dr. Lisa Damour, adolescent psychologist and author, stated that when positioned as educational resources rather than therapists, these tools have value.

Some specialized applications developed with clinical oversight show promise for specific scenarios. Programs like Woebot, designed with mental health professionals, demonstrate better safeguards than general-purpose AI chatbots.

Expert Recommendations for Teens and Parents

Mental health professionals offer several guidelines for safer technology use:

- Treat AI interactions as informational rather than therapeutic.

- Establish clear boundaries for when to seek human professional help.

- Use only AI tools that disclose their limitations and provide crisis resources.

- Maintain open parent-teen communication about digital mental health habits.

Parents are encouraged to familiarize themselves with the platforms their teens use. Dr. Jenny Radesky, pediatrician specializing in digital media, advised families to normalize regular conversations about where teens find mental health information.

For neurodivergent teens, experts recommend platforms designed with their needs in mind. Some emerging tools incorporate neurodiversity-affirming design principles that better support different communication styles.

How Tech Companies Are Responding

Major AI developers have started implementing safeguards after public pressure. OpenAI recently updated ChatGPT to include more robust mental health disclaimers and crisis resource information.

Google’s Bard now redirects users to human-staffed crisis lines when it detects potential self-harm content. A company spokesperson said the company recognizes its responsibility to guide users to appropriate resources.

However, critics argue that these measures remain insufficient and are inconsistently applied. The Center for Humane Technology has called for mandatory mental health safety standards across all consumer-facing AI platforms, particularly those accessible to minors.

Resources for Safe Support

Traditional mental health resources are adapting to improve teen accessibility. The Crisis Text Line (text HOME to 741741) offers 24/7 support from human counselors by text message, a channel many teens prefer.

School-based mental health services have expanded, with over 70% of public schools now offering some form of counseling support. These programs provide professionally vetted resources in familiar environments.

For neurodivergent youth, organizations like AANE (Asperger/Autism Network) and CHADD (Children and Adults with ADHD) offer support groups and resources designed with neurodiversity-affirming approaches.

Conclusion

As neurodivergent teens increasingly use AI chatbots for mental health support, experts urge caution because of ongoing risks of misinterpreted communication and inadequate crisis responses. Tech companies have made some progress on safety features, but standards are uneven. What to watch: developments in AI mental health safeguards and any new guidelines from major pediatric and psychological associations.
